Could technology inspired by artificial intelligence help general aviation pilots to carry out flights more safely? Developers are working on products that could aid pilots of small aircraft by acting as another pair of eyes in the cockpit, helping to identify other traffic and landing sites and providing guidance for landing.

Dr Luuk van Dijk came into the field of aviation software after working for Google and SpaceX and is keen to bring innovation to general aviation. He founded autonomous flight control company Daedalean in August 2016 in Zürich with his ex-Google colleague Anna Chernova, who is also a pilot.

“After having seen SpaceX and looking at the state of avionics in general civil aviation, I was taken aback by how manual flying still is, well into the 2nd decade of the 21st century,” van Dijk said. “The certification barriers and the low volumes compared to automotive and consumer systems make it very difficult to truly innovate. That a Piper Super Cub designed in 1949 with nothing but the mandatory minimal instrumentation is as legal and formally as safe to fly as the most technology-packed glass cockpit equipped modern GA aircraft is not a testimony to innovation in avionics.”

Recent mid-air collisions involving light aircraft have shown that, while flying is very safe overall, there is always more to be done to improve safety in aviation.

Together with partner Avidyne, Daedalean is using machine learning, specifically neural networks, to create computer vision systems that can give a pilot a complete picture of the situation in the air and below. Unlike humans, the systems can monitor the whole sky and, unlike current transponder-based traffic systems, they can also identify potential threats that aren’t equipped with transponders, such as birds, balloons and gliders.

Avidyne’s experimental Cessna with Daedalean systems on board. Credit: Daedalean

While the technology could eventually be used in autonomous aircraft, such as flying taxis, the immediate aim is to reduce the workload on pilots today, with the software acting as a helpful co-pilot, whether in fixed-wing aircraft or helicopters.

“They lower the cognitive load on the pilots by giving more situational context,” van Dijk declares. “For example, you can use the visual landing guidance to get a better approach without being overloaded… It should give you slightly more peace of mind that there’s nothing out there that you forgot to see.”

Is it a bird? Is it a plane?

Machine learning can teach computers tasks that are relatively easy for humans. For example, we are all familiar with those captchas asking which images contain buses, or traffic lights, designed to filter out bots.

“As a simple example, I show you a 30 by 30 picture of even black and white pixels. And I ask you, is this a cat? Or is this an airplane?” van Dijk explains in an interview with AeroTime.

Visual traffic detection. Credit: Daedalean

It’s only in the last 10-15 years that machine learning techniques have made it possible for computers to begin answering these kinds of questions, thus giving rise to the computer vision technology that could be used in aircraft.

“What we can do in airspace, is we can recognize things that are flying that we should not fly into, which is very important,” van Dijk says. “And then we can recognize safe places to land, which is also very important. We can find a runway just because it looks like a runway, just like a human does. And then we can see is that runway free? Or is it obstructed? These are things that are going to be very hard to program the classical way. But with these machine learning techniques, that becomes feasible.”

So, what can the technology do now?

Currently, Daedalean has a system that can do three main tasks, using computer vision and cameras. It works in visual meteorological conditions (VMC), but the next step is for it to be used in instrument meteorological conditions (IMC) too, where cloud and other weather obscure the pilot’s view.

The systems can position and navigate without GPS, detect and track traffic (without using current ADS-B systems) and provide landing guidance, such as recognizing runways or helipads. Not relying on GPS is an innovative step because GPS signals can be switched off or disrupted by countries, as has been reported in Europe this year after Russia’s invasion of Ukraine.

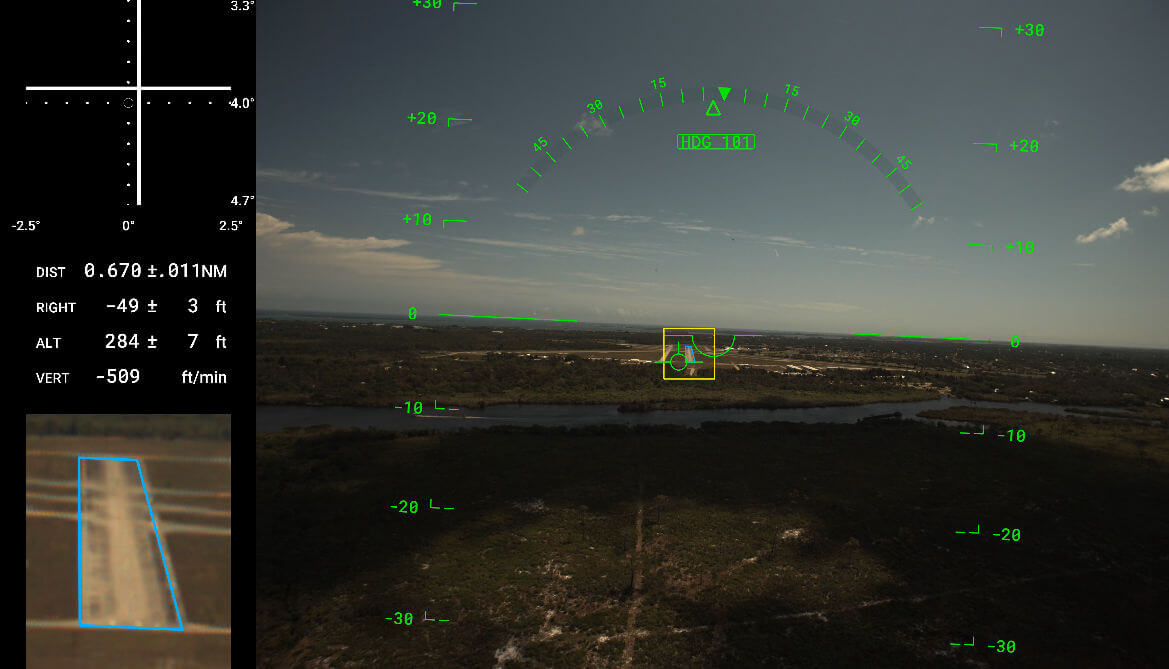

Daedalean visual landing system. Credit: Daedalean

Van Dijk said Daedalean’s aim was not to develop just another system that competes for a pilot’s attention. The idea is to ease the workload.

A demonstrator kit is already available for general aviation operators and pilots who want to see the technology with their own eyes and get used to what it can do before the actual certified products are available.

Describing the traffic detection system, which is expected to be the first product to market, van Dijk says: “It sees things by the time they’re roughly 10 by 10 pixels, which translates for a Cessna in 3-4 nautical miles out.”
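
As a rough back-of-the-envelope check on that figure, the sketch below works out how fine a camera's per-pixel angular resolution would need to be for a Cessna-sized aircraft to span about 10 pixels at 3-4 nautical miles. The 11 m wingspan (roughly that of a Cessna 172, seen wingspan-on) and the function name are assumptions for illustration, not figures supplied by Daedalean.

```python
import math

METERS_PER_NAUTICAL_MILE = 1852.0  # exact by definition


def required_pixel_resolution_mrad(target_size_m, range_nm, pixels_on_target):
    """Angle each pixel must cover (milliradians) for a target of the given
    size to span `pixels_on_target` pixels at `range_nm` nautical miles."""
    range_m = range_nm * METERS_PER_NAUTICAL_MILE
    # Angular size of the target as seen from the camera.
    angular_size_rad = 2.0 * math.atan(target_size_m / (2.0 * range_m))
    return 1000.0 * angular_size_rad / pixels_on_target


# Assumption: ~11 m wingspan, viewed wingspan-on.
for range_nm in (3.0, 4.0):
    res = required_pixel_resolution_mrad(11.0, range_nm, 10)
    print(f"{range_nm:.0f} NM: about {res:.2f} mrad per pixel")
```

Under these assumptions each pixel would need to cover roughly 0.15 to 0.2 milliradians, which gives a sense of the camera resolution such a detection range implies.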

When it comes to landing guidance, the software can spot a rectangle in the camera picture roughly where the runway is. “When we get a bit closer, we can make out the geometry and we can distill our deviation from the centerline and the pre-selected glideslope,” van Dijk says.
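
The deviation van Dijk describes can be illustrated with simple geometry. The sketch below assumes the vision system has already estimated the aircraft's position relative to the runway's touchdown aim point (distance along the extended centerline, sideways offset and height); that estimation is the hard part and is not shown. The function name, the coordinate convention and the 3-degree default glideslope are assumptions for illustration, not Daedalean's implementation.

```python
import math


def approach_deviation_deg(along_track_m, cross_track_m, height_m,
                           glideslope_deg=3.0):
    """Return (lateral, vertical) deviation in degrees.

    along_track_m: distance to the aim point along the extended centerline
    cross_track_m: offset from the centerline (positive = right of it)
    height_m:      height above the aim point
    """
    if along_track_m <= 0:
        raise ValueError("aircraft must be short of the aim point")
    # Angle between the extended centerline and the line to the aircraft.
    lateral = math.degrees(math.atan2(cross_track_m, along_track_m))
    # Actual descent path angle compared with the selected glideslope.
    actual_path = math.degrees(math.atan2(height_m, along_track_m))
    vertical = actual_path - glideslope_deg  # positive = above glideslope
    return lateral, vertical


# Hypothetical example: 2 km out, 35 m right of centerline, 120 m high.
lateral, vertical = approach_deviation_deg(2000.0, 35.0, 120.0)
print(f"lateral {lateral:+.2f} deg, vertical {vertical:+.2f} deg")
```

Expressing both deviations as angles mirrors how conventional approach guidance is displayed, so a pilot could read the output in the same way as a localizer and glideslope indication.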

The software can then give guidance that pilots can follow to touch down. The aim is that eventually the cameras and software will feed into autoland systems, allowing aircraft, whether light aircraft or flying taxis, to carry out landings without pilots manipulating the controls.

The certification puzzle

As anyone in aviation knows, coming up with a new product is one thing; getting it certified for use is another ball game.

“In 2017, we went to EASA to ask them what they would say if we showed up with a machine learning component in the avionics system. And they said, ‘We would tell you to go away,’” remembers van Dijk.

Machine learning (ML) and neural networks (NN) can’t be checked and certified line by line like traditional software, which is certified under the DO-178 standard.

As van Dijk puts it, it wasn’t a case of building a system and proving that it works. “In this case, we also have to develop the theory of how you demonstrate at all that A) the system is a fit for purpose, and B) has no unintended function.”

After much hard work, study and research, both EASA and its US counterpart, the FAA, have roadmaps in place for how to ensure the safety of AI and machine-learning components and therefore certify them for use in aviation.

Daedalean’s first product to market will be Pilot Eye, a traffic detection product, which will be integrated with the primary and multifunction flight displays (PFDs/MFDs) made by partner Avidyne. Certification is expected in mid-2023.

Landing Avidyne’s experimental Cessna. Credit: Daedalean

The trademark is owned by Avidyne, which is therefore the one applying for a supplemental type certificate (STC) with EASA and the FAA. Daedalean is also in the process of becoming a design organization itself, which will allow it to submit products for certification in the future.

The STC project is in progress with the FAA and EASA jointly, and the two agencies are planning to align their approach on the first-ever certification for this type of flight-control-related product. Daedalean says it will be a DAL-C product, or, in the terminology of EASA’s AI roadmap, Level 1 (‘assistance to human’).

“It’s our beachhead into certified land because if the theory works, and the FAA accepts this as a means of compliance, then we’ve moved the entire industry, in all modesty, to a new generation of potential avionics,” van Dijk said.

Urban air mobility

While it is expected that operators of GA fleets will be most interested in the technology now to improve safety by reducing the workloads on pilots, as well as reducing costs by making flying more efficient, van Dijk sees the technology as playing a crucial role in enabling urban air mobility.

Integrating new vehicles such as electric air taxis is widely seen as a challenge for the emerging UAM industry. Van Dijk says the idea of having hundreds of air taxis buzzing about over cities like London is a bit “naive” because it will be up to air traffic control to keep everyone separated and they can only handle so much at once, especially in IFR conditions.

NASA researchers have even proposed a new operating mode, digital flight rules, to sit alongside visual and instrument flight rules (VFR and IFR) and keep everyone safely separated in the air as we move to new ways of flying.

“If you want to have hundreds, you’re going to have the computers look at each other, talk to each other and stay out of each other’s way without overloading the ATC system,” van Dijk predicts.

That’s where machine-learning-based systems will come in. While the technology can currently be used as an aid to situational awareness for piloted flight, van Dijk expects it eventually to be integrated with the autopilot, to start communicating with other aircraft and the ground, and then to move to full autonomy.